When people talk about AR or XR, they often mix them up – and it’s easy to see why. The lines between these technologies blur fast. But there’s a simple way to think about it: AR adds digital stuff to your world. XR covers the whole spectrum – from subtle overlays to fully immersive virtual experiences.

That spectrum includes AR, VR, and MR – and they each shape how we interact with data, games, workspaces, or even other people. Whether you’re browsing furniture through your phone or training in a virtual lab, understanding what separates AR from XR helps make sense of where all this tech is heading.

Understanding the Spectrum of Realities

Before diving into comparisons, it helps to understand what each term actually means. XR, AR, VR, and MR all sit on a scale – a “virtuality continuum” – defining how much of our real world is replaced, enhanced, or blended with digital content.

What is Augmented Reality (AR)?

Augmented Reality is where the real and digital worlds meet – but you’re still anchored in reality. Instead of replacing your surroundings, AR adds a digital overlay on the real world. You’re looking at your kitchen, your office, your street – but with extra data, images, or tools layered on top.

This technology doesn’t block out your senses like VR. You’re present in the moment – but seeing more than what’s physically there. That’s the whole point: AR enhances what’s already around you.

How It Shows Up in the Real World

Augmented reality examples are already mainstream. Open Instagram or Snapchat and launch a face filter – you’re using AR. Walk through your apartment with IKEA Place to see how furniture fits before you buy it – that’s AR, too.

Smartphones and smart glasses are the usual hardware, though AR is increasingly moving into hands-free wearables. It’s already reshaping fields like retail, education, and healthcare, offering real-time interaction without disconnecting you from your environment.

What is Virtual Reality (VR)?

Virtual Reality doesn’t build on top of your world – it tosses it out and gives you a new one. Put on a headset, and suddenly your room’s gone. You’re standing on Mars. Or underwater. Or inside a quiet architectural model, walking through walls that haven’t been built yet. That full switch – from real to rendered – is what makes VR unique.

Everything you see is digital. The only thing grounding you is the headset tracking your movement, your direction, your gestures. That’s where the tech gets powerful: it gives you space to think, move, and react without the noise of the real world pressing in.

VR isn’t just for gamers. It’s used in medical simulations, engineering prototypes, military training, and increasingly, remote collaboration. A surgeon can walk through a 3D model of an organ. A team can review a virtual factory layout before the first bolt is ever tightened. That level of spatial computing changes how people design, train, and solve problems – not on paper, but from the inside out.

How It Shows Up in the Real World

The most recognizable name in VR is probably the Oculus Rift, which helped push home VR into the mainstream; the Oculus brand has since been folded into Meta, and its headsets now ship as Meta Quest. Then there’s Beat Saber, a rhythm game that’s more exhausting than it looks – slashing neon blocks while ducking walls feels more like dance than play. Games like these show off the potential of virtual reality headsets, but the bigger picture is serious: learning environments, virtual classrooms, immersive journalism – all of them building inside spaces that don’t physically exist.

What is Mixed Reality (MR)?

Mixed Reality lives in that weird middle space – not quite here, not fully virtual. It’s what happens when the digital world doesn’t just sit on top of reality, like in AR, but actually interacts with it. MR systems understand the physical space around you and let virtual elements respond to it – and to you.

That means digital objects can hide behind real ones. They can sit on your table, cast shadows, react to lighting, or even bounce if you touch them with a controller. The technology tracks your position, your hands, your eyes. It sees what you see, and it builds on it.
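One way to picture the "hide behind real objects" behavior is a per-pixel depth test. The sketch below is only an illustration, not how any particular headset implements it: it assumes the device supplies a depth value for every camera pixel, and the function name `composite` and the one-row "image" are made up for the example.

```python
def composite(camera, real_depth, virtual, virtual_depth):
    """Blend a virtual layer into a camera frame using a per-pixel depth test.

    All four arguments are 2D lists of the same shape. A virtual pixel of
    None means "no virtual object here". Depths are distances from the
    viewer, so a smaller value is closer.
    """
    frame = []
    for cam_row, rd_row, v_row, vd_row in zip(camera, real_depth, virtual, virtual_depth):
        row = []
        for cam_px, rd, v_px, vd in zip(cam_row, rd_row, v_row, vd_row):
            # Draw the virtual pixel only if it exists and sits in front of
            # the real surface; otherwise the real world occludes it.
            if v_px is not None and vd < rd:
                row.append(v_px)
            else:
                row.append(cam_px)
        frame.append(row)
    return frame


# Hypothetical one-row frame: the middle pixel shows a real object at 1.0 m,
# which is closer than the hologram at 1.5 m, so the hologram is hidden there.
camera = [["real", "real", "real"]]
real_depth = [[2.0, 1.0, 2.0]]
virtual = [["holo", "holo", None]]
virtual_depth = [[1.5, 1.5, None]]
print(composite(camera, real_depth, virtual, virtual_depth))
# prints [['holo', 'real', 'real']]
```

Real systems do this on the GPU with far denser depth data, but the comparison at the heart of it is the same.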

It’s easy to confuse MR with AR, and sometimes companies deliberately blur the terms. But the difference between AR and MR comes down to how deep the interaction goes. AR adds content. MR connects it. If AR is about adding, MR is about blending – making the digital feel like part of the physical space.

How It Shows Up in the Real World

Take Microsoft HoloLens. Put it on, and you can pin windows to your wall, move holograms with your hands, or follow floating instructions while repairing a machine. It’s a headset, yes – but it doesn’t block your view. It adds to it in a way that reacts to your environment, letting virtual models behave like they belong in the room. That’s Mixed Reality at work. It’s less flashy than VR, but more powerful when precision matters – in labs, in factories, and in operating rooms where hands need both data and visibility.

What is Extended Reality (XR)?

Extended Reality, or XR, is less a single technology and more a catch-all term. If AR, VR, and MR are branches, XR is the tree they grow from. It wraps them into one category, giving us a way to talk about immersive tech as a whole – without needing to draw hard lines between where one ends and the next begins.

This isn’t just a marketing term (though it often sounds like one). XR is practical shorthand, especially when the tools overlap. Some headsets can run AR one minute and flip into VR the next. Some platforms use both to offer custom levels of immersion. XR lets designers, developers, and businesses speak a common language when discussing future interfaces – without getting bogged down in acronyms.

If you’ve ever seen the phrase “XR vs AR”, it’s not really a battle – it’s a matter of scope. XR includes AR. It also includes VR and MR. The key difference between AR and XR is that AR is specific. XR is the full framework.
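For readers who think in code, the scope relationship can be written down as a tiny data model. This is just a sketch of the taxonomy described above; the names `Modality`, `XR`, `REAL_WORLD_VISIBLE`, and `is_xr` are invented for the illustration and don't come from any SDK.

```python
from enum import Enum


class Modality(Enum):
    AR = "augmented reality"
    MR = "mixed reality"
    VR = "virtual reality"


# XR is not a fourth modality; it is the set that contains the other three.
XR = frozenset(Modality)

# Whether the user still sees their physical surroundings, per the
# descriptions in this article.
REAL_WORLD_VISIBLE = {
    Modality.AR: True,
    Modality.MR: True,
    Modality.VR: False,
}


def is_xr(modality: Modality) -> bool:
    """Every specific modality counts as XR."""
    return modality in XR


print(is_xr(Modality.AR))  # prints True
```

That is the whole "XR vs AR" question in one line: AR is a member, XR is the set.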

How It Shows Up in the Real World

XR shows up in industries where switching between realities makes sense. In healthcare, surgeons use AR for navigation, VR for training, and MR for planning. In retail, XR creates full product experiences – like trying on clothes in a virtual fitting room or visualizing furniture in a digital showroom. Even education is being reshaped by extended reality applications that blend visual, spatial, and interactive elements for deeper learning.

Whether it’s used in design, simulation, entertainment, or therapy, XR is the umbrella that makes space for flexibility – and that’s exactly why it’s gaining traction across so many sectors.

XR vs AR: Comparison Table

When people try to make sense of AR and XR, the confusion often comes down to scope, and the differences are easier to see side by side. Whether you’re deciding what tech to build on or simply trying to understand the landscape, here’s how the two compare by key criteria.

| Feature | AR | XR |
| --- | --- | --- |
| Definition | Overlays digital info on the real world | Encompasses AR, VR, and MR |
| Interaction with Real World | Yes | Varies by technology |
| Devices Used | Smartphones, smart glasses | VR headsets, AR/MR devices |
| Immersion Level | Partial, real world remains visible | Varies – from minimal to full immersion |
| Popular Examples | Google Lens | HoloLens, Oculus, Meta Quest |

Key Technology Components

You can’t separate immersive technology from the hardware that powers it. Whether it’s AR on a phone or XR in a full headset, the experience is only as strong as the tools behind it. From the displays you look through to the sensors that track your movements, every layer of these systems plays a role in how convincingly digital and physical worlds collide.

Devices: Headsets and Glasses

The gear says a lot about what kind of experience you’re getting. For AR, it’s mostly phones and smart glasses. The phone’s camera captures your surroundings, then overlays directions, text, or animations on top. It’s fast, familiar, and always in your pocket. Glasses like Google Glass or the newer Ray-Ban Meta versions aim to make those overlays more natural – less “look at your phone,” more “see it as you move.”

XR goes deeper. It includes AR, but also covers headsets like Meta Quest or Microsoft HoloLens – hardware that can switch modes between virtual and real. These aren’t accessories; they’re platforms. Some let you walk through walls in VR, others pin holograms to your actual desk. The difference between AR and XR starts to show here: AR keeps things light. XR wants to transport you.

Input Systems: Cameras and Sensors

Nothing’s immersive if it doesn’t react. AR uses your camera to recognize planes and surfaces – drop a couch into your room, or track a face for filters. It works. But it’s shallow. Tap and drag. Done. There’s not much depth – literally.
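The "drop a couch into your room" step usually boils down to a hit test: cast a ray from the camera through the point you tapped and find where it meets a detected plane. Here is a minimal sketch of that geometry, assuming the plane has already been detected and is described by a point and a normal. The function `hit_test` and its parameters are hypothetical names for this example, not the API of any AR framework.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_test(ray_origin: Vec3, ray_dir: Vec3,
             plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Return the point where a ray meets a plane, or None if it never does."""
    denom = dot(ray_dir, plane_normal)
    if abs(denom) < 1e-9:
        return None  # ray runs parallel to the plane
    to_plane = (plane_point[0] - ray_origin[0],
                plane_point[1] - ray_origin[1],
                plane_point[2] - ray_origin[2])
    t = dot(to_plane, plane_normal) / denom
    if t < 0:
        return None  # the plane is behind the camera
    return (ray_origin[0] + t * ray_dir[0],
            ray_origin[1] + t * ray_dir[1],
            ray_origin[2] + t * ray_dir[2])


# Camera held 1.5 m above a floor at y = 0, looking down and forward.
floor_point, floor_normal = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)
print(hit_test((0.0, 1.5, 0.0), (0.0, -1.0, -1.0), floor_point, floor_normal))
# prints (0.0, 0.0, -1.5)
```

The returned point is where the virtual couch would be anchored. Everything else (scaling, rotating, dragging) builds on that one intersection.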

In XR, real-time interaction between digital and physical takes priority. So you get sensors – motion tracking, hand detection, even eye tracking. HoloLens maps your room in 3D, then lets you throw a hologram across it. Headsets track not just where you are, but how you move, where you look, what you focus on. This is where spatial computing comes in: it doesn’t just place content in space, it understands space. That shift? It’s not a UX detail – it’s the foundation of immersive user experience.

Processing Power: Processors and Batteries

The stuff under the hood matters. AR runs off mobile processors – the same chips you use to scroll Instagram or stream YouTube. They’re optimized for battery life, not intensity. That’s fine for basic overlays, but as soon as you ask for more – shadows, 3D, responsiveness – things start to stutter.

XR can’t afford that. It has to render environments in real time, process multiple sensor inputs, and sometimes handle full-scale extended reality applications – like industrial simulation or remote repair. That means serious chips: Snapdragon XR2, Apple’s R1, or custom-built silicon. But with that power comes heat. And drain. Batteries are constantly playing catch-up.

The trade-off is obvious: AR is lightweight. XR is heavier, faster, hotter. You don’t just need better hardware – you need smarter energy design. That’s the invisible war behind every XR headset.
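The arithmetic behind that trade-off is simple: runtime is roughly battery capacity divided by average power draw. The sketch below uses purely hypothetical numbers to show the shape of the problem; they are placeholders, not measurements of any real device.

```python
def runtime_hours(battery_wh: float, avg_power_w: float) -> float:
    """Rough runtime estimate: capacity (watt-hours) / average draw (watts)."""
    if avg_power_w <= 0:
        raise ValueError("average power draw must be positive")
    return battery_wh / avg_power_w


# Hypothetical figures for illustration only, not real device specs.
light_overlay = runtime_hours(battery_wh=15.0, avg_power_w=3.0)
full_rendering = runtime_hours(battery_wh=15.0, avg_power_w=10.0)
print(light_overlay, full_rendering)  # prints 5.0 1.5
```

Same battery, very different session length. That gap is why power budgeting shapes headset design as much as raw chip performance does.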

Applications in Everyday Life

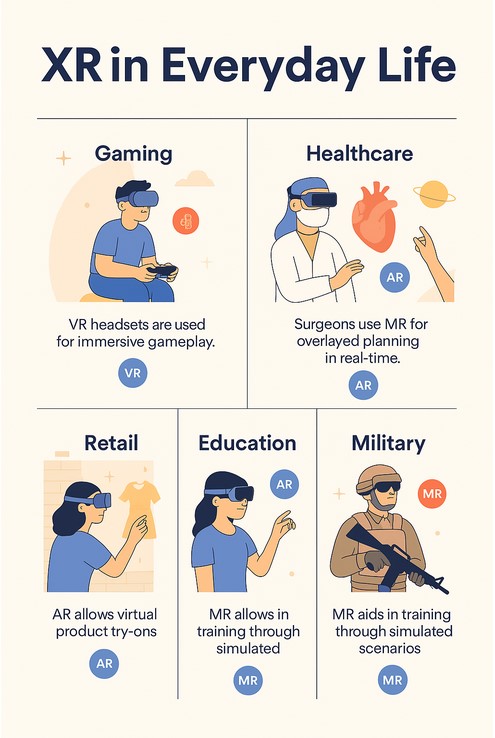

These technologies aren’t just demos – they’re already integrated into real workflows and consumer habits. Here’s where XR, AR, and MR are showing up in the wild.

Gaming

Gaming is where XR tech matured fast. VR gave players full immersion; AR made mobile spaces interactive. Now we see crossover – from location-based quests to MR escape rooms. The AR vs VR vs MR vs XR debate is loudest here, but in practice, gamers just want presence and control. Headsets deliver that, and studios are catching up.

Healthcare

Surgeons use MR to plan procedures, students train in VR, and nurses follow AR overlays during treatment. These aren’t experiments – they’re live. AR in healthcare aims to improve outcomes by combining precision with accessibility. It also shows where AR and MR part ways on hardware: most tablets handle AR, while MR still needs headset-grade power to work reliably.

Education

Digital content gets remembered better when students can move through it. That’s why AR in education is booming – from interactive anatomy models to museum tours with embedded media. When used well, XR makes abstract ideas physical. This is where the future of immersive tech is heading: tactile, spatial, real-time learning.

Retail and E-commerce

Virtual try-ons, AR product placement, digital store previews – AR in retail gives buyers confidence before checkout. XR takes this further, creating full-blown virtual showrooms. These aren’t gimmicks – they’re conversion tools. This is where the contrast between AR and XR becomes visible: AR shows the product; XR builds the environment around it.

Industrial and Military

From remote inspections to immersive combat simulation, XR is already in use across both heavy industry and defense. Headsets place digital overlays on real-world equipment for training, repair, or targeting. It’s high-stakes tech, often with metaverse integration baked in for coordination across remote teams.

The Future of Immersive Technology

The tech isn’t futuristic anymore. It’s just unevenly distributed. XR isn’t asking if it will matter – it’s asking where it’ll hit hardest first. Here’s what’s coming fast.

Metaverse

Everyone rolled their eyes when tech CEOs started mumbling about the metaverse, but here’s the thing – it’s already creeping into workflows. Not the cartoon avatars. The infrastructure. Remote training that doesn’t suck. Product demos that feel physical. That’s metaverse integration done right. When it blends into XR apps without shouting its name, that’s when it gets real.

Remote Collaboration

Shared screens only go so far. XR makes remote feel less… remote. Instead of talking over slides, teams move through the same digital space. Marking up models. Pointing at machines. Designing in real time. This kind of spatial computing doesn’t replace meetings – it replaces miscommunication. It’s not future tech. It’s just better meetings with legs.

Digital Twins

Digital twins started as 3D models, sure – but now they’re synced to real-time data. And XR gives you a way to walk inside them. A city. A jet engine. A server farm. That’s the edge: when you can step into a system and feel how it works. The future of immersive tech isn’t graphics – it’s insight you can navigate.
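What "synced to real-time data" means in practice can be sketched in a few lines: the twin keeps the latest reading per sensor and ignores anything that arrives out of date. This is a toy model under stated assumptions. The class `DigitalTwin`, the sensor name, and the values are all hypothetical, and a production twin would sit on top of a real telemetry pipeline.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class DigitalTwin:
    name: str
    # sensor id -> (timestamp in seconds, latest value)
    readings: Dict[str, Tuple[float, float]] = field(default_factory=dict)

    def apply(self, sensor: str, timestamp: float, value: float) -> bool:
        """Store a reading unless a newer one is already held."""
        last = self.readings.get(sensor)
        if last is not None and timestamp < last[0]:
            return False  # stale update arrived late; keep the newer value
        self.readings[sensor] = (timestamp, value)
        return True

    def snapshot(self) -> Dict[str, float]:
        """Current state, ready to be rendered onto the 3D model."""
        return {sensor: value for sensor, (_, value) in self.readings.items()}


twin = DigitalTwin("pump")
twin.apply("temp_c", timestamp=10.0, value=71.5)
twin.apply("temp_c", timestamp=9.0, value=60.0)  # older reading, ignored
print(twin.snapshot())  # prints {'temp_c': 71.5}
```

An XR viewer would read `snapshot()` each frame and color or annotate the model accordingly, which is what turns a static 3D asset into something you can walk through and interrogate.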

AI & XR Integration

Throw AI into the mix and XR stops being a tool – it becomes a guide. Instead of tapping buttons, you look at something and the system knows what to do. It highlights. Explains. Moves with you. This is where the differences between AR, VR, and MR start to fade. When machines understand space and context, XR just clicks. And that’s what XR really is – a container for possibility.

FAQ

What is the difference between AR and XR?

AR adds digital content to your real environment. XR is the umbrella term that includes AR, VR, and MR. So if you’re using AR, you’re technically using XR too.

Is XR better than AR?

Not always. XR offers more flexibility, but AR is lighter, faster, and better for casual use. It depends on the experience you want – full immersion, blended interaction, or just digital overlays.

What are examples of XR in daily life?

Trying furniture in your room using AR, attending a VR concert, or fixing machinery with MR instructions – all are examples of XR showing up in real life.

How do XR technologies affect business?

They speed up training, cut travel, improve collaboration, and help visualize complex systems. XR isn’t just cool – it saves time and money when applied right.

What’s the difference between MR and XR?

MR blends real and virtual elements that interact in real time. XR includes MR, but also AR and VR. XR is the whole spectrum; MR is just one part of it.